Data Science Snippets: Ensemble Models- Part 7 - Boosting and Stacking

Ensemble models are techniques that combine multiple algorithms to enhance prediction accuracy, stability, and generalization in Machine Learning.

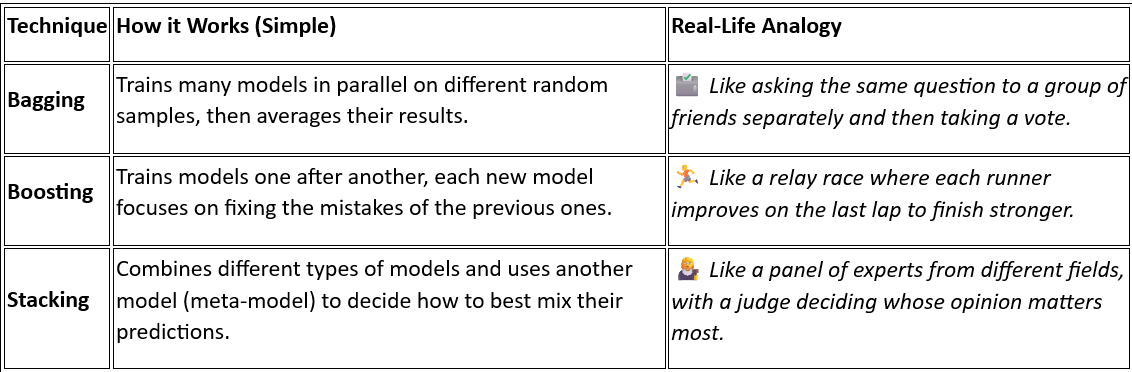

In the previous posts, we introduced Decision Trees. We also understood the types of Decision Trees and the different ways in which they split. We saw how a Decision Tree splits mathematically, and what pruning is. We took a sneak peek at the Random Forests. We delved deep into Random Forests. After Bagging, let’s look at two other ensemble techniques that make models smarter: Boosting and Stacking/Blending.

Boosting and Stacking: Two Ensemble Superpowers

Boosting

Builds models sequentially, each one learning from the mistakes of the previous.

Think of it as a relay race: every runner (model) improves on the last lap.

Great for improving accuracy on tough and noisy datasets.

Popular methods: AdaBoost, Gradient Boosting, XGBoost.

Stacking / Blending

Combines different types of models (trees, logistic regression, neural nets, etc.).

A meta-model learns how to best mix their predictions.

Think of it as a panel of experts: each brings a unique perspective, and the final decision balances them all.

Widely used in competitions and production for performance gains.

To conclude: Bagging vs Boosting vs Stacking/Blending

Up Next: We’ll unlock the magic of XGBoost and the art of Blending.

So stay tuned !!

Share this post with your friends and family, leave feedback, and don’t forget to hit Subscribe !!